Jyothir S V

I am a Jr. Research Scientist at the Center for Data Science at New York University, where I am advised by Yann LeCun and Kyunghyun Cho. My research interests lie at the intersection of reinforcement learning, self-supervised learning, and humanoid control, as well as in enhancing the mathematical reasoning capabilities of large language models. I completed my undergraduate studies at the Indian Institute of Technology (IIT) Mandi, located in the Himalayas, where I conducted research under the mentorship of Aditya Nigam.

Please feel free to contact me: jyothir -at- nyu.edu

Email / GitHub / Google Scholar / LinkedIn


Research


Hierarchical World Models as Visual Whole-Body Humanoid Controllers

Nicklas Hansen, Jyothir S V, Vlad Sobal, Yann LeCun, Xiaolong Wang, Hao Su

ICLR 2025, CoRL Workshop on Whole-body Control and Bimanual Manipulation 2024

arXiv / code

In this paper, we introduce a hierarchical world model for whole-body humanoid control that learns dynamics from human motion capture data. We also propose a novel task suite for evaluation and demonstrate the effectiveness of our model through a range of experiments.


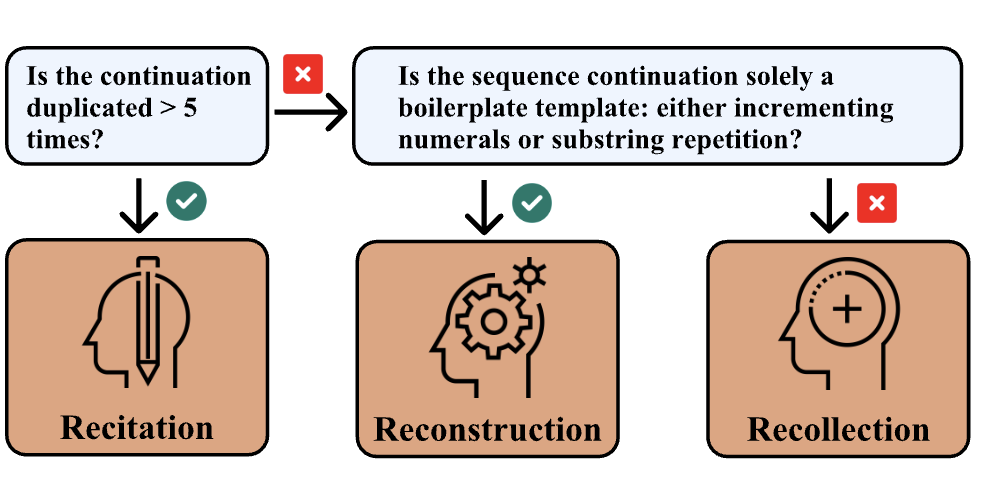

Recite, Reconstruct, Recollect: Memorization in LMs as a Multifaceted Phenomenon

USVSN Sai Prashanth*, ..., Jyothir S V*, ..., Katherine Lee, Naomi Saphra

ICLR 2025, EMNLP Findings 2024

arXiv / code

In this work, we propose a taxonomy for memorization in language models that breaks it down into recitation, reconstruction, and recollection. We demonstrate the taxonomy's utility by constructing predictive models for memorization and analyzing dependencies that differ across categories and features.


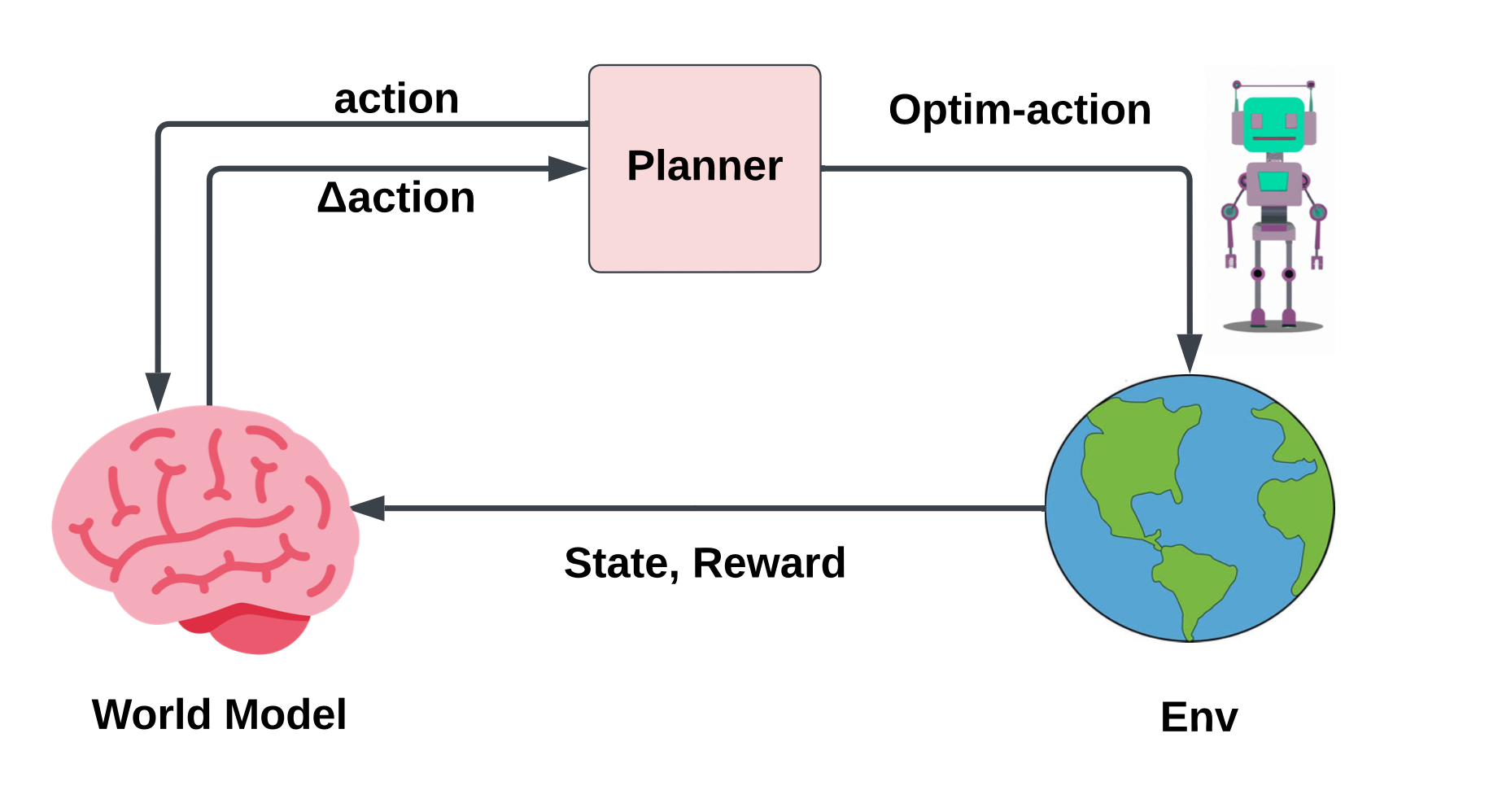

Gradient-based Planning with World Models

Jyothir S V, Siddhartha Jalagam, Yann LeCun, Vlad Sobal

ICLR Generative Models for Decision Making Workshop 2024

arXiv / code

Most model predictive control (MPC) algorithms designed for visual world models have traditionally relied on gradient-free, population-based optimization methods for planning, such as the Cross-Entropy Method (CEM) and Model Predictive Path Integral (MPPI). We explore a gradient-based alternative that fully leverages the differentiability of the world model.


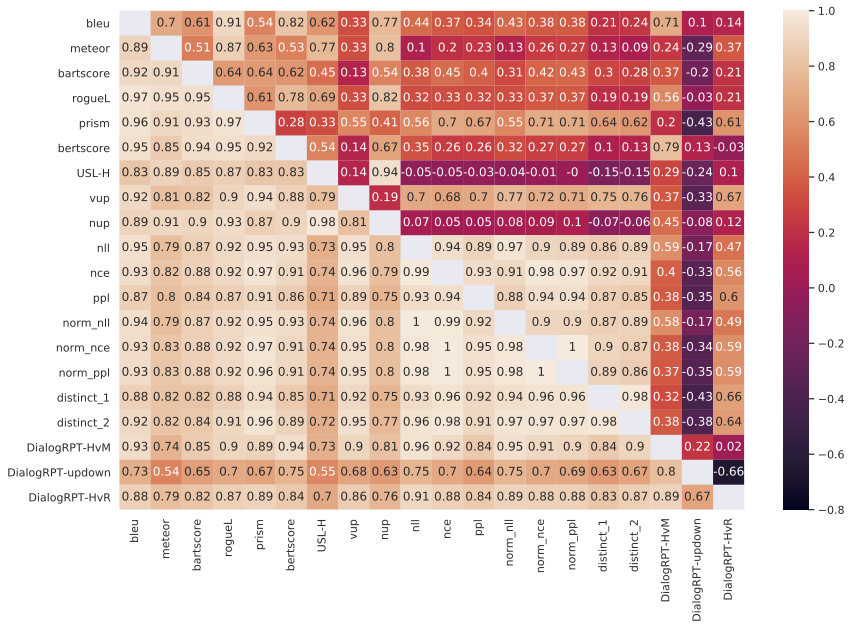

A Massively Multi-System MultiReference Data Set for Dialog Metric Evaluation

Huda Khayrallah, Zuhaib Akhtar, Edward Cohen, Jyothir S V, João Sedoc

EMNLP Findings 2024

arXiv

Automatic metrics for dialogue evaluation should be robust proxies for human judgments; however, the verification of their robustness is currently far from satisfactory. To quantify the robustness correlation and understand what is necessary in a test set, we create and release an 8-reference dialog dataset. We then train 1750 systems and evaluate them, along with publicly available large models, on our novel test set and the DailyDialog dataset.


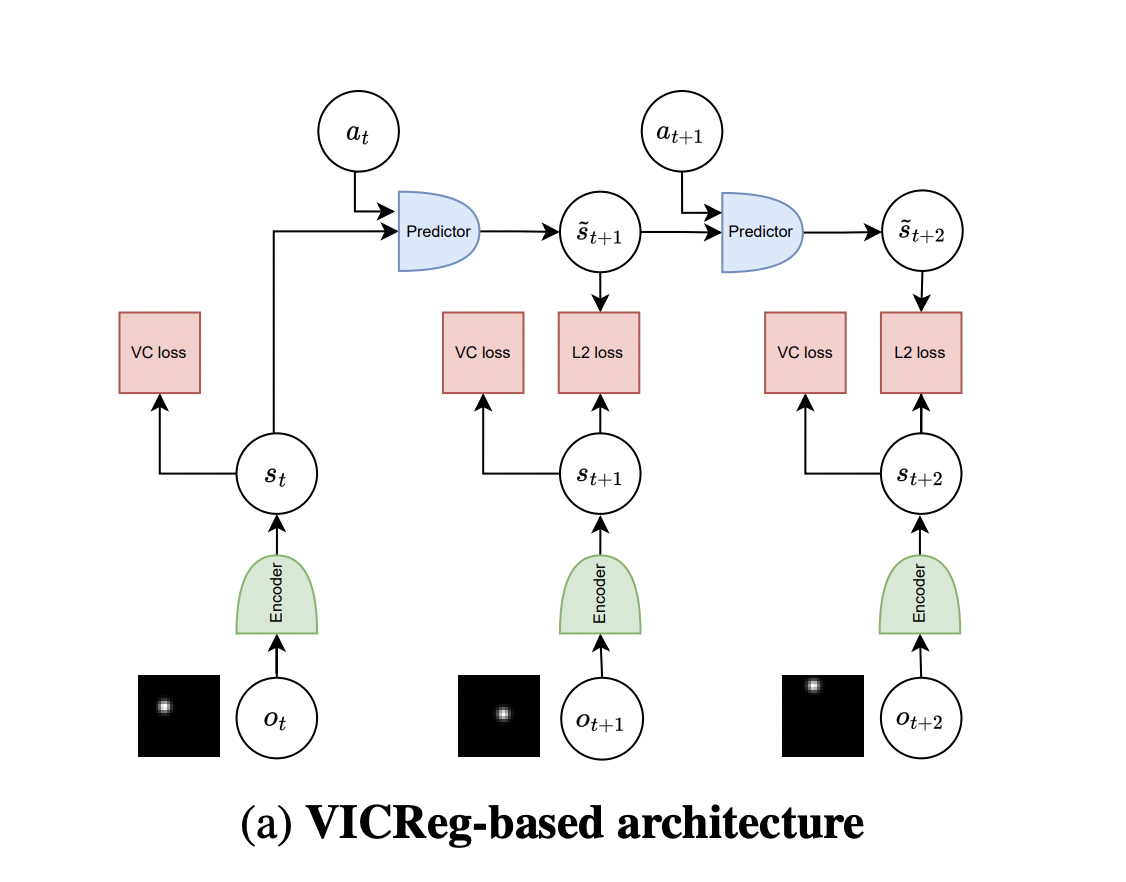

Joint Embedding Predictive Architectures Focus on Slow Features

Vlad Sobal, Jyothir S V, Siddhartha Jalagam, Nicholas Carion, Kyunghyun Cho, Yann LeCun

NeurIPS SSL Workshop 2022

arXiv / code

In this work, we analyze the performance of Joint Embedding Predictive Architectures (JEPAs) trained with VICReg and SimCLR objectives in a fully offline setting without access to rewards, and compare the results to those of a generative architecture.


Design and source code from Jon Barron's website